Dosya:Cretaceous 90ma co2 900 annual temperature 2.png

Tam çözünürlük ((2.240 × 1.488 piksel, dosya boyutu: 1,31 MB, MIME tipi: image/png))

Bu dosya Wikimedia Commons'ta bulunmaktadır. Dosyanın açıklaması aşağıda gösterilmiştir. Commons, serbest/özgür telifli medya dosyalarının bulundurulduğu depodur. Siz de yardım edebilirsiniz. |

Özet

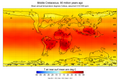

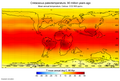

| AçıklamaCretaceous 90ma co2 900 annual temperature 2.png |

English: Cretaceous annual temperature in Celcius, 90 million years ago.

Exoplaism simulation. Vegetation, wet soil and ice. CO2 900 ppmv. Simuilated TMean in world 23,43 Celcius. |

| Tarih | |

| Kaynak | Yükleyenin kendi çalışması |

| Yazar | Merikanto |

This temperature map is generated with Exoplasim python script, variable tsa, day 180, second year.

Map is based on Scotese 180 paleodems,

https://www.earthbyte.org/paleodem-resource-scotese-and-wright-2018/

PaleoDEM Resource – Scotese and Wright (2018)

11 August, 2018 by Sabin Zahirovic

Download PaleoDEM rasters as a zip file from here:

https://zenodo.org/record/5460860

August 1, 2018 Dataset Open Access

PALEOMAP Paleodigital Elevation Models (PaleoDEMS) for the Phanerozoic

Scotese, Christopher R; Wright, Nicky M

For more information about this resource, contact Christopher R. Scotese at cscotese@gmail.com.

Must install some stuff to Ubuntu

- install plasim deps

sudo apt update -y

sudo apt install -y openjdk-17-jre

sudo apt install -y --reinstall firefox

sudo apt install -y f2c

sudo apt install -y fftw3

sudo apt install -y openmpi-bin libopenmpi-dev

sudo apt install -y python3-pip

sudo apt install -y python-is-python3

sudo apt install -y python-numpy

pip3 install netcdf4

pip3 install exoplasim[netCDF4]

Expoplasim code:

Note:

Try install exoplasim code directly from git source, instead from pip wheel.

Exoplasim code can crash easily. If you use only basic modules to run exoplasim code, it crashes not so often: not ice etc.

- Ecoplasim planet running code

- exoplasim example

- in ra

- convert to T21, input netcdf

- load one lon, lat, z grid

- or Tarasov glac1d grid

- 25.12.2021 0000.0004b

-

- MPI NOTE: if you use more than

- one processor, you cannot in most cases run MPI in root

- in ubuntu you must install

-

- pip3 install exoplasim[netCDF4]

- not

- "sudo pip3 install exoplasim[netCDF4]"

import numpy as np

import matplotlib.pyplot as plt

from scipy.interpolate import interp2d

import netCDF4

import exoplasim as exo

NLAT=0

NLON=0

def writeSRA(name,kcode,field,NLAT,NLON):

label=name+'_surf_%04d.sra'%kcode

header=[kcode,0,20170927,0,NLON,NLAT,0,0]

fmap = field.reshape((int(NLAT*NLON/8),8))

sheader =

for h in header:

sheader+=" %11d"%h

lines=[]

i=0

while i<NLAT*NLON/8:

l=

for n in fmap[i,:]:

l+=' %9.3f'%n

lines.append(l)

i+=1

text=sheader+'\n'+'\n'.join(lines)+'\n'

f=open(label,'w')

f.write(text)

f.close()

print (label)

def writeSRA2(label,kcode,field,NLAT,NLON):

#label=name+'_surf_%04d.sra'%kcode

header=[kcode,0,20170927,0,NLON,NLAT,0,0]

fmap = field.reshape((int(NLAT*NLON/8),8))

sheader =

for h in header:

sheader+=" %11d"%h

lines=[]

i=0

while i<NLAT*NLON/8:

l=

for n in fmap[i,:]:

l+=' %9.3f'%n

lines.append(l)

i+=1

text=sheader+'\n'+'\n'.join(lines)+'\n'

f=open(label,'w')

f.write(text)

f.close()

print (label)

def savenetcdf_single_frommem(outfilename1, outvarname1, xoutvalue1,xoutlats1,xoutlons1):

nlat1=len(xoutlats1)

nlon1=len(xoutlons1)

#indata_set1=indata1

print(outfilename1)

ncout1 = netCDF4.Dataset(outfilename1, 'w', format='NETCDF4')

outlat1 = ncout1.createDimension('lat', nlat1)

outlon1 = ncout1.createDimension('lon', nlon1)

outlats1 = ncout1.createVariable('lat', 'f4', ('lat',))

outlons1 = ncout1.createVariable('lon', 'f4', ('lon',))

outvalue1 = ncout1.createVariable(outvarname1, 'f4', ('lat', 'lon',))

outvalue1.units = 'Unknown'

outlats1[:] = xoutlats1

outlons1[:] = xoutlons1

outvalue1[:, :] =xoutvalue1[:]

ncout1.close()

return 0

def loadnetcdf_single_tomem(infilename1, invarname1):

global cache_lons1

global cache_lats1

print(infilename1)

inc1 = netCDF4.Dataset(infilename1)

inlatname1="lat"

inlonname1="lon"

inlats1=inc1[inlatname1][:]

inlons1=inc1[inlonname1][:]

cache_lons1=inlons1

cache_lats1=inlats1

indata1_set1 = inc1[invarname1][:]

dim1=indata1_set1.shape

nlat1=dim1[0]

nlon1=dim1[1]

inc1.close()

return (indata1_set1)

def create_sras(topo):

global NLAT

global NLON

topo2=topo

masko=topo

topo2[topo2 < 1] = 0

masko[masko < 1] = 0

masko[masko > 0] = 1

grid=np.flipud(masko)

name="Example"

writeSRA(name,129,topo,NLAT,NLON)

writeSRA(name,172,grid,NLAT,NLON)

writeSRA2("topo.sra",129,topo2,NLAT,NLON)

writeSRA2("landmask.sra",172,grid,NLAT,NLON)

return(0)

def convert_to_t21(infilename1, outfilename1):

global NLAT

global NLON

indimx=361

indimy=181

#indimx=360

#indimy=360

## t21 64x32

shapex=64

shapey=32

NLAT=shapex

NLON=shapey

nc = netCDF4.Dataset(infilename1)

inlats=nc['lat'][:]

inlons=nc['lon'][:]

#print(inlats)

#print(inlons)

latlen=len(inlats)

lonlen=len(inlons)

#print(lonlen, latlen)

indimx=lonlen

indimy=latlen

dem=nc['z']

#dem=np.flipud(dem000)

dem2=np.copy(dem)

#dem2[dem2 < 0] = 0

#plt.imshow(dem,cmap='gist_earth')

#plt.imshow(dem2,cmap='gist_earth')

#plt.show()

#quit(0)

lts=[85.7606, 80.2688, 74.7445, 69.2130, 63.6786, 58.1430, 52.6065, 47.0696,

41.5325,35.9951, 30.4576, 24.9199, 19.3822, 13.8445, 8.3067, 2.7689,

-2.7689, -8.3067, -13.8445, -19.3822, -24.9199, -30.4576, -35.9951, -41.5325,

-47.0696, -52.6065, -58.1430, -63.6786, -69.2130, -74.7445, -80.2688, -85.7606]

##

lns=[0, 5.6250, 11.2500, 16.8750, 22.5000, 28.1250, 33.7500 ,39.3750,

45.0000, 50.6250, 56.2500, 61.8750, 67.5000, 73.1250, 78.7500, 84.3750,

90.0000, 95.6250, 101.2500, 106.8750, 112.5000, 118.1250, 123.7500, 129.3750,

135.0000, 140.6250, 146.2500, 151.8750, 157.5000, 163.1250, 168.7500, 174.3750,

180.0000, 185.6250, 191.2500, 196.8750, 202.5000, 208.1250, 213.7500, 219.3750,

225.0000, 230.6250, 236.2500, 241.8750, 247.5000, 253.1250, 258.7500, 264.3750,

270.0000, 275.6250, 281.2500, 286.8750, 292.5000, 298.1250, 303.7500, 309.3750,

315.0000, 320.6250, 326.2500, 331.8750, 337.5000, 343.1250, 348.7500, 354.3750]

ly2=len(lts)

lx2=len(lns)

shapex=lx2

shapey=ly2

#print("sheip")

#print(shapex, shapey)

lons, lats = np.meshgrid(lns,lts)

#print (lts)

#print (lns)

new_W, new_H = (shapey,shapex)

xrange = lambda x: np.linspace(0, 360, x)

f2 = interp2d(xrange(indimx), xrange(indimy), dem2, kind="linear")

#f2 = interp2d(range(indimx), range(indimy), dem2, kind="cubic")

demo = f2(xrange(shapex), xrange(shapey))

#plt.imshow(demo)

#plt.show()

#quit(0)

f3 = interp2d(xrange(indimx), xrange(indimy), dem2, kind="linear")

#masko = f3(xrange(shapex), xrange(shapey))

#topo=np.flipud(demo)

topo=demo

#grid=np.fliplr(masko)

#def savenetcdf_single_frommem(outfilename1, outvarname1, xoutvalue1,xoutlats1,xoutlons1):

savenetcdf_single_frommem(outfilename1, "z", topo,lts,lns)

return(topo,lons,lats)

def load_glac1d_dem(indatafile, outdatafile, a_yr):

# load dem from Tarsaov GLAC1d anno domini 2021

global NLAT

global NLON

yr=a_yr

lok=int(abs(yr/100-260))

# tarasov ice 26k

nc = netCDF4.Dataset(indatafile1)

#print(nc)

eisbase=nc['ICEM']

inlats=nc['YLATGLOBP5'][:]

inlons=nc['XLONGLOB1'][:]

dem=nc['HDCB'][lok]

#dem=np.flipud(dem000)

#print (dem)

#print (np.shape(dem))

#plt.imshow(dem,cmap='gist_earth')

savenetcdf_single_frommem(outdatafile, "z",dem,inlats,inlons)

return(0)

- maybe nok

def convert_to_t42(infilename1, outfilename1):

## ONLY attempi! to create T42!

global NLAT

global NLON

indimx=361

indimy=181

## t42 64x32

#shapex=64

#shapey=32

shapex=128

shapey=64

#shapey=63

NLAT=shapex

NLON=shapey

nc = netCDF4.Dataset(infilename1)

inlats=nc['lat'][:]

inlons=nc['lon'][:]

latlen=len(inlats)

lonlen=len(inlons)

indimx=lonlen

indimy=latlen

dem=nc['z']

#dem=np.flipud(dem000)

dem2=np.copy(dem)

## test t21

tdx=360.0/shapex

#tdy=180.0/shapey

tdy=(90.0-85.706)/2

minix=0.0

maksix=360-tdx

maksiy=90-tdy

miniy=-90+tdy

#print(90-tdy)

#

#print(miniy)

#print(maksiy)

#quit(-1)

#lns=np.linspace(minix, maksix, num=shapex)

#lts=np.linspace(maksiy, miniy, num=shapey)

## jn WARNING 90!

lts=[87.8638, 85.0965 ,82.3129, 79.5256, 76.7369 ,73.9475 ,71.1578, 68.3678, #ok

65.5776, 62.7874, 59.9970 ,57.2066, 54.4162, 51.6257, 48.8352, 46.0447,

43.2542, 40.4636, 37.6731 ,34.8825, 32.0919, 29.3014, 26.5108, 23.7202,

20.9296, 18.1390, 15.3484 ,12.5578, 9.7671, 6.9765, 4.1859, 1.3953,

-1.3953, -4.1859, -6.9765, -9.7671, -12.5578, -15.3484, -18.1390, -20.9296,

-23.7202,-26.5108, -29.3014 ,-32.0919, -34.8825, -37.6731, -40.4636,-43.2542,

-46.0447,-48.8352, -51.6257, -54.4162, -57.2066, -59.9970, -62.7874, -65.5776,

-68.3678,-71.1578 ,-73.9475, -76.7369 ,-79.5256, -82.3129, -85.0965, -87.8638]

lns=[0.0000 ,2.8125, 5.6250, 8.4375, 11.2500, 14.0625 ,16.8750 ,19.6875,

22.5000,25.3125, 28.1250, 30.9375 ,33.7500,36.5625 ,39.3750, 42.1875,

45.0000,47.8125, 50.6250, 53.4375, 56.2500, 59.0625 ,61.8750, 64.6875,

67.5000, 70.3125, 73.1250, 75.9375, 78.7500, 81.5625, 84.3750, 87.1875,

90.0000, 92.8125, 95.6250 ,98.4375 ,101.2500, 104.0625, 106.8750, 109.6875,

112.5000, 115.3125, 118.1250, 120.9375,123.7500 ,126.5625 ,129.3750, 132.1875,

135.0000, 137.8125, 140.6250 ,143.4375, 146.2500 ,149.0625, 151.8750 ,154.6875,

157.5000, 160.3125, 163.1250, 165.9375, 168.7500, 171.5625 ,174.3750, 177.1875,

180.0000, 182.8125, 185.6250 ,188.4375, 191.2500, 194.0625, 196.8750, 199.6875,

202.5000, 205.3125, 208.1250, 210.9375, 213.7500 ,216.5625, 219.3750 ,222.1875,

225.0000, 227.8125, 230.6250 ,233.4375, 236.2500, 239.0625, 241.8750, 244.6875,

247.5000, 250.3125, 253.1250, 255.9375, 258.7500, 261.5625, 264.3750, 267.1875,

270.0000, 272.8125, 275.6250, 278.4375, 281.2500 ,284.0625 ,286.8750, 289.6875,

292.5000, 295.3125, 298.1250, 300.9375, 303.7500 ,306.5625, 309.3750, 312.1875,

315.0000, 317.8125, 320.6250, 323.4375, 326.2500, 329.0625 ,331.8750, 334.6875,

337.5000, 340.3125, 343.1250, 345.9375, 348.7500, 351.5625 ,354.3750 ,357.1875]

#lns=

#print (lts)

#print (lns)

#print (len(lns),len(lts))

#quit(-1)

ly2=len(lts)

lx2=len(lns)

shapex=lx2

shapey=ly2

#print("sheip")

#print(shapex, shapey)

lons, lats = np.meshgrid(lns,lts)

new_W, new_H = (shapey,shapex)

xrange = lambda x: np.linspace(0, 360, x)

f2 = interp2d(xrange(indimx), xrange(indimy), dem2, kind="linear")

demo = f2(xrange(shapex), xrange(shapey))

f3 = interp2d(xrange(indimx), xrange(indimy), dem2, kind="linear")

topo=demo

savenetcdf_single_frommem(outfilename1, "z", topo,lts,lns)

return(topo,lons,lats)

- exoplasim ,,,

def run_exoplasim_z(a_input_dem1, a_gridtype, a_layers, a_years,a_timestep,a_snapshots,a_ncpus,a_eccentricity,a_obliquity,a_lonvernaleq,a_pCO2):

#output_format=".npz"

output_format=".nc"

a_pO2=1-a_pCO2-0.79

a_pN2=(1-0.21-a_pCO2)

print("Precess input grid, to type ",a_gridtype)

if(a_gridtype=="T21"):

print("T21")

topo, lons, lats=convert_to_t21(a_input_dem1,"demT21.nc")

if(a_gridtype=="T42"):

print("T42")

topo, lons, lats=convert_to_t42(a_input_dem1, "demT42.nc")

create_sras(topo)

print("Creating exoplasim object ")

#testplanet= exo.Model(workdir="testplanet_run",modelname="TESTPLANET",ncpus=a_ncpus,resolution="T21")

#testplanet= exo.Earthlike(workdir="planet_run",modelname="PLANET",ncpus=a_ncpus,resolution="T21",outputtype=output_format, crashtolerant=True)

testplanet= exo.Earthlike(workdir="planet_run",modelname="PLANET",ncpus=a_ncpus,resolution=a_gridtype,layers=a_layers, outputtype=output_format, crashtolerant=True)

## earth 21000 BP

glaciers1= {

"toggle": True,

"mindepth":2,

"initialh":-1

}

testplanet.configure(

startemp=5772.0,

flux=1367,# Stellar parameters

eccentricity=a_eccentricity,

obliquity=a_obliquity,

lonvernaleq=a_lonvernaleq,

fixedorbit=True, # Orbital parameters

rotationperiod=1, # Rotation

topomap="topo.sra",

landmap="landmask.sra",

radius=1.0,

gravity=9.80665, # Bulk properties

#seaice=False,

#maxsnow=False,

#glaciers=False,

#stormclim=False,

#vegetation=0,

wetsoil=True, #alters albedo of soil based on how wet it is

vegetation=2, #toggles vegetation module; 1 for static vegetation, 2 to allow growth

vegaccel=1,

seaice=True,

maxsnow=1,

glaciers=glaciers1,

#stormclim=False,

#vegetation=0,

pN2=a_pN2,

pCO2=a_pCO2,

pO2=a_pO2,

ozone=True, # Atmosphere

timestep=a_timestep,

snapshots=0, ## jos a_snapshots, vie muistia!

#wetsoil=True,

physicsfilter="gp|exp|sp") # Model dynamics

testplanet.exportcfg()

print("Running ExoPlasim ... ")

#testplanet.run(years=a_years,crashifbroken=True)

testplanet.run(years=a_years,crashifbroken=True)

runc=1

#model.run(years=runsteps1, crashifbroken=True)

savename = 'planet_run_'+str(runc1)

testplanet.finalize(savename,allyears=False,clean=False,keeprestarts=True)

testplanet.save(savename)

#testplanet.cfgpostprocessor(times=12) #number of output times (months) in the output files

#model.exportcfg()

#ts = model.getbalance(key='ts')

#savename = 'model_run_'+str(runc1)

#model.finalize(savename,allyears=False,clean=False,keeprestarts=True)

#model.save(savename)

#level = max(10e-6, level) #sets the minimum CO2 level

#model.modify(pCO2=level)

#model.runtobalance(baseline=10, maxyears=1000, minyears=10, timelimit=480, crashifbroken=True clean=True)

#model.finalize('model_final',allyears=False,clean=False,keeprestarts=True)

#model.save('model_final')

return(0)

print("Exoplasim run OK.")

lon = testplanet.inspect("lon")

lat = testplanet.inspect("lat")

ts = testplanet.inspect("ts",tavg=True)

tsc=ts-273.15

#im=plt.pcolormesh(lon,lat,ts,cmap="RdBu_r",vmin=273.15-60.0,vmax=273.15+60.0,shading="Gouraud")

im=plt.pcolormesh(lon,lat,tsc,cmap="RdBu_r",vmin=-60.0,vmax=60.0,shading="Gouraud")

#plt.contour(lon,lat,ts,[273.15,],colors=['gray',])

#plt.contour(lon,lat,tsc,[-30,-20,-10,0,10,20,30],colors=['gray',])

#plt.contour(lon,lat,tsc,[0,5,10,15,20,25,30],colors=['blue',]

plt.contour(lon,lat,tsc,[0,5,10,15,20,25,30,35], color="red")

plt.contour(lon,lat,tsc,[0,-5,-10,-15,-20,-25,-30,-35], color="blue")

plt.colorbar(im,label="Surface Temperature [K]")

plt.xlabel("Longitude [deg]")

plt.ylabel("Latitude [deg]")

plt.title("Testplanet Surface Temperature")

plt.show()

return(0)

-

-

print(" Exoplasim simulation code ---")

- jn warning maybe nok

- input_dem='./indata/indem.nc'

- input_dem='./indata/Map22_PALEOMAP_1deg_Mid-Cretaceous_95Ma.nc'

- input_dem='./indata/Map14_PALEOMAP_1deg_Paleocene_Eocene_Boundary_55Ma.nc'

- input_dem='/indata/Map13_PALEOMAP_1deg_Early_Eocene_50Ma.nc'

- input_dem='./indata/Map12_PALEOMAP_1deg_early_Middle_Eocene_45Ma.nc'

- input_dem='./indata/Map18_PALEOMAP_1deg_Late_Cretaceous_75Ma.nc' ## OK

- input_dem='./indata/Map20_PALEOMAP_1deg_Late_Cretaceous_85Ma.nc' ## nok

- input_dem='./indata/Map24_PALEOMAP_1deg_Early Cretaceous_105Ma.nc' ## nok

- input_dem='./indata/Map17_PALEOMAP_1deg_Late_Cretaceous_70Ma.nc' ##nok

- input_dem='./indata/Map19_PALEOMAP_1deg_Late_Cretaceous_80Ma.nc'

- input_dem='./indata/Map19_PALEOMAP_1deg_Late_Cretaceous_80Ma.nc' ## OK

input_dem='./indata/Map21_PALEOMAP_1deg_Mid-Cretaceous_90Ma.nc' #90ma

- input_dem='./indata/Map49_PALEOMAP_1deg_Permo-Triassic Boundary_250Ma.nc' # PT raja co2 1600. jopa 3000-4000

- input_dem='./indata/Map57_PALEOMAP_1deg_Late_Pennsylvanian_300Ma.nc' ## Late Pennsylcanian ice, co2 200? 250?

- input_dem="./indata/Map56_PALEOMAP_1deg_Early_Permian_295Ma.nc"

- indatafile1='./indata/TOPicemsk.GLACD26kN9894GE90227A6005GGrBgic.nc'

- input_dem="origodem.nc"

- a_yr=14500

- load_glac1d_dem(indatafile1, input_dem, 14500)

- input one de scotese palaeomap dem!

- def convert_to_t42(infilename1, outfilename1):

- topo, lons, lats=convert_to_t21(input_dem, "demT21.nc")

- topo, lons, lats=convert_to_t42(input_dem, "demT42.nc")

- plt.imshow(topo,cmap='gist_earth')

- plt.show()

a_modelname1="planet"

a_workdir1="planet_run"

a_runsteps1=21

a_years1=a_runsteps1

a_timestep1=30

a_snapshots1=0

a_ncpus1=4

a_layers1=8

a_outputtype1=".nc"

- a_resolution1="T42"

a_resolution1="T21"

a_precision1=4

a_crashtolerant1=True

a_landmap1="landmask.sra"

a_topomap1="topo.sra"

- nowadays ca 0 BP

- a_eccentricity1=0.01671022

- a_obliquity1=23.44

- a_lonvernaleq1=102.7

- a_pCO21=360

- cretaceous

a_eccentricity1=0.0167022

a_obliquity1=23.441

a_lonvernaleq1=102.7

a_pCO21=900.0e-6

- early permian 295 ma

- late pennsylvanian 300 ma

- a_eccentricity1=0.01671022

- a_obliquity1=23.441

- a_lonvernaleq1=102.7

- a_pCO2=250.0e-6 ## ca 200 - 250 ppmvol

- a_pCO21=180.0e-6

- a_pCO21=100.0e-6

- permo-triassic boundary ca 250 ma

- a_eccentricity1=0.01671022

- a_obliquity1=23.441

- a_lonvernaleq1=102.7

- a_pCO21=1600.0e-6 ## cal1600 ppmvol 3000 ? 2000-4000

- 14500 yrs ago

- a_eccentricity1=0.019595

- a_obliquity1=23.6801

- a_lonvernaleq1=10

- a_pCO21=210e-6

print("Exoplasim ...")

- run_exoplasim_y(a_workdir1, a_modelname1, a_outputtype1, a_resolution1, a_layers1, a_ncpus1, a_precision1, a_crashtolerant1,a_timestep1,a_runsteps1,a_landmap1, a_topomap1,a_eccentricity1,a_obliquity1, a_lonvernaleq1,a_pCO21)

run_exoplasim_z(input_dem, a_resolution1, a_layers1, a_years1,a_timestep1,a_snapshots1,a_ncpus1,a_eccentricity1,a_obliquity1,a_lonvernaleq1,a_pCO21)

print(".")

- process dem file to mask

- and flatten sea

library(raster)

library(ncdf4)

library(rgdal)

library(png)

file1="./indata/Map19_PALEOMAP_1deg_Late_Cretaceous_80Ma.nc"

file2="dem.nc"

file3="dem.tif"

ur1<-raster(file1)

ur1[ur1[]<1] <- 0

- image(ur1)

- plot(ur1)

lonr1 <- init(ur1, 'x')

latr1 <- init(ur1, 'y')

crs(ur1)<-"+proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0"

writeRaster(ur1, file2, overwrite=TRUE, format="CDF", varname="Band1", varunit="m",

longname="Band1", xname="lon", yname="lat")

writeRaster(ur1, file3, overwrite=TRUE, format="GTiff", varname="Band1", varunit="m",

longname="Band1", xname="lon", yname="lat")

crs(lonr1)<-"+proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0"

crs(latr1)<-"+proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0"

writeRaster(lonr1, "lons.nc", overwrite=TRUE, format="CDF", varname="Band1", varunit="deg",

longname="Band1", xname="lon", yname="lat")

writeRaster(latr1, "lats.nc", overwrite=TRUE, format="CDF", varname="Band1", varunit="deg",

longname="Band1", xname="lon", yname="lat")

r=ur1

dims<-dim(r)

dims

r[r[]<1] <- 0

r[r[]>0] <- 1

image(r)

- stop(-1)

print (dims[1])

print (dims[2])

rows=dims[2]

cols=dims[1]

- stop(-1)

mask0<-r

mask1<-mask0[]

mask2<-matrix(mask1, ncol=cols, nrow=rows )

mask3<-t(mask2)

r <- writePNG(mask3, "test.png")

plot(r)

- png('mask.png', height=nrow(r), width=ncol(r))

- plot(r, maxpixels=ncell(r))

- image(r, axes = FALSE, labels=FALSE)

- dev.off()

Capture temperature slice

-

- exoplasim output netcdf post-process

- capture slices, maybe annual mean

-

- 0000.0002 27.12.2021

library(raster)

library(ncdf4)

library(rgdal)

get_t21_nc_variable<-function(filename1, variable1, start1, count1)

{

nc1=nc_open(filename1)

ncdata0 <- ncvar_get( nc1, variable1, start=c(1,1, start1), count=c(64,32,count1) )

lons1<- ncvar_get( nc1, "lon")

lats1<- ncvar_get( nc1, "lat")

nc_close(nc1)

return(ncdata0)

}

monthlymean<-function(daata)

{

daataa=rowSums(daata, dims=2)/12

return(daataa)

}

save_t21_ast_georaster_nc<-function(outname1,daataa, outvar1, longvar1, unit1)

{

## note warning: mayne not accurate!

ext2<-c(-180, 180, -90,90)

daataa2<-t(daataa)

r <- raster(daataa2)

extent(r) <- ext2

crs(r)<-"+proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0"

writeRaster(r, outname1, overwrite=TRUE, format="CDF", varname=outvar1, varunit=unit1,

longname=longvar1, xname="lon", yname="lat")

}

capture_mean_variable<-function(infile1, outfile1, start1, count1, variable1, unit1, ofset1, coef1)

{

udata00<-get_t21_nc_variable(file1, variable1, start1, count1)

udata01=monthlymean(udata00)

udata02=((udata01+ofset1)*coef1)

#image(udata02)

mean1=mean(udata02)

print(mean1)

outname1=outfile1

outvar1=variable1

longvar1=variable1

unit1=unit1

save_t21_ast_georaster_nc(outname1,udata02, outvar1, longvar1, unit1)

}

capture_slice_variable<-function(infile1, outfile1, start1, variable1, unit1, ofset1, coef1)

{

udata01<-get_t21_nc_variable(file1, variable1, start1, 1)

#udata01=monthlymean(udata00)

udata02=((udata01+ofset1)*coef1)

#image(udata02)

mean1=mean(udata02)

print(mean1)

outname1=outfile1

outvar1=variable1

longvar1=variable1

unit1=unit1

save_t21_ast_georaster_nc(outname1,udata02, outvar1, longvar1, unit1)

}

- file1="E:/varasto_plasim/CRETACEOUS_90MA_900CO2_BASIC_NOICE_NOWETSOIL/MOST_SNAP.00015.nc"

file1="E:/varasto_plasim/cretaceous_90ma_t21_1/MOST_SNAP.00021.nc"

- file1="E:/varasto_plasim/penssylvania_permi_250mya_co2_250ppm_1/MOST_SNAP.00015.nc"

- file1="E:/varasto_plasim/penssylvania_permian_300ma_co2_50ppm_2/MOST_SNAP.00100.nc"

- file1="./koppenpasta/creta90.nc"

- file1="./koppenpasta/sand20.nc"

- file1="E:/varasto_simutulos_2/kgp2a3_ok/2/MOST.00010.nc"

variable1="tsa"

start1=1

count1=12

outfile1="tsa_mean.nc"

unit1="C"

ofset1=-273.15

coef1=1

capture_mean_variable(infile1, outfile1, start1, count1, variable1, unit1, ofset1, coef1)

variable1="tsa"

start1=1

outfile1="tsa_1.nc"

unit1="C"

ofset1=-273.15

coef1=1

capture_slice_variable(infile1, outfile1, start1, variable1, unit1, ofset1, coef1)

variable1="tsa"

start1=7

outfile1="tsa_7.nc"

unit1="C"

ofset1=-273.15

coef1=1

capture_slice_variable(infile1, outfile1, start1, variable1, unit1, ofset1, coef1)

variable1="icez"

start1=1

count1=12

outfile1="icez_mean.nc"

unit1="m2s2"

ofset1=0

coef1=1

capture_mean_variable(infile1, outfile1, start1, count1, variable1, unit1, ofset1, coef1)

variable1="pr"

start1=1

count1=12

outfile1="pr_mean.nc"

unit1="mm"

ofset1=0

coef1=1000*3600*24*360

capture_mean_variable(infile1, outfile1, start1, count1, variable1, unit1, ofset1, coef1)

variable1="veggpp"

start1=1

count1=12

outfile1="veggpp_mean.nc"

unit1="kg/m2/ha"

ofset1=0

coef1=3600*24*360*100*100

capture_mean_variable(infile1, outfile1, start1, count1, variable1, unit1, ofset1, coef1)

- stop(-1)

Downscale w/R

- "R" temperature downscaler

library(raster)

library(ncdf4)

library(rgdal)

library(viridis)

downscale_temperature_65 <- function (coarse_rastera, fine_rastera)

{

## methods: 0 delta, 1 spatialeco, 2 dissever, 3 temperature lapse 6.5 C/1 km lm

print ("Downscaling temperature, 6.5 C/1 km")

coarse_raster<-coarse_rastera

fine_raster<-fine_rastera

p1<-fine_raster

p2<-fine_raster

plot(fine_raster)

plot(coarse_raster, col=viridis(200))

coarseoro<- resample(p1, coarse_raster)

coarseoro_big<-resample(coarseoro, p1)

orodelta<-(p1-coarseoro_big)

baset1 <- resample(coarse_raster, p1)

raster_stack <- stack(p1,p2)

orotemp<-orodelta*0.0065*-1

coarseorotemp<- resample(orotemp, coarse_raster)

coarseorotemp_big<-resample(coarseorotemp, p1)

orotempdelta<-orotemp-coarseorotemp_big

outtemp<-baset1+orotempdelta

#outtempr<-rotate(outtemp)

#plot(outtempr)

return(outtemp)

}

coarsename1<-"tsa_mean.nc"

- filename1<-"./indata1/Map57_PALEOMAP_6min_Late_Pennsylvanian_300Ma.nc"

- filename1="./indata/Map16_PALEOMAP_6min_KT_Boundary_65Ma.nc"

filename1="E:/varasto_paleodem/Scotese_Wright_2018_Maps_1-88_6minX6min_PaleoDEMS_nc/Map21_PALEOMAP_6min_Mid-Cretaceous_90Ma.nc"

coarser1<-raster(coarsename1)

finer1<-raster(filename1)

finer2<-finer1

finer2[finer2<0.0]<-0.0

rout1<-downscale_temperature_65(coarser1, finer2)

ext1<-extent(-180,180,-90,90)

crs1<-"+proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0"

crs(rout1)<-crs1

extent(rout1 )<-ext1

image(rout1)

writeRaster(rout1, "temp_dskaled1.nc", overwrite=TRUE, format="CDF", varname="tsa", varunit="degC",

longname="tsa", xname="lon", yname="lat")

Downscale output data w/python

-

- netcdf downscaler

- also habitat test

- python 3,GDAL

-

- v 0012.0003

- 26.12.2021

-

import numpy as np

import scipy

import pandas as pd

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

from matplotlib import cm

from colorspacious import cspace_converter

from collections import OrderedDict

- import colormaps as cmaps

- import cmaps

import netCDF4 as nc

import os

from scipy.interpolate import Rbf

from sklearn.linear_model import LinearRegression

from sklearn.preprocessing import PolynomialFeatures

from sklearn.pipeline import make_pipeline

from sklearn.ensemble import RandomForestRegressor

from sklearn.preprocessing import StandardScaler

from sklearn.model_selection import train_test_split

from sklearn import svm, metrics

from pygam import LogisticGAM

from pygam import LinearGAM

import pandas as pd

import array as arr

import scipy.stats

- if basemap is available, we'll use it.

- otherwise, we'll improvise later...

try:

from mpl_toolkits.basemap import Basemap

basemap = True

except ImportError:

basemap = False

- control vars

- random fotest

RF_estimators1=10

RF_features1=2

- downscaler

DS_method=0 ## default random forest

DS_show=1 ## view downscaled

DS_input_log=0 ## convert "b" var to log during downscaling

cache_lons1=[]

cache_lats1=[]

cache_x=[]

cache_y=[]

cache_z=[]

cache_x2=[]

cache_y2=[]

cache_z2=[]

def probability_single_var( x1, y1, x2):

print ("Specie habitation test")

xa1=np.array(x1)

ya1=np.array(y1)

xa2=np.array(x2)

sha1=np.shape(x1)

dim2=1

x=xa1.reshape((-1, dim2))

y=ya1.reshape((-1, 1))

xb=xa2.reshape((-1, dim2))

#y=y*0.0+1

y=x

#print(x)

#print(y)

x_mean=np.mean(x)

x_std=np.std(x)

x_cover_std=(x-x_mean)/x_std

y_mean=np.mean(y)

y_std=np.std(y)

y_cover_std=(y-y_mean)/y_std

kohde=abs(x-x_mean)/x_std

#print (kohde)

#print (x-x_mean)

#quit()

#plt.plot(x_cover_std)

#plt.show()

#model = LinearRegression().fit(x, kohde)

#degree=3

#polyreg=make_pipeline(PolynomialFeatures(degree),LinearRegression())

#model=polyreg.fit(x,kohde)

sc = StandardScaler()

xa = sc.fit_transform(x)

xba = sc.transform(xb)

#model = RandomForestRegressor(n_estimators=10, max_features=2).fit(xa,kohde)

#model = LogisticGAM().fit(x, kohde)

model = LinearGAM().fit(x, kohde)

y2= model.predict(xb)

#svm1 = svm.OneClassSVM(nu=0.1, kernel="rbf", gamma=0.5)

#svm1 = svm.OneClassSVM(nu=0.1, kernel="rbf", gamma=0.5)

#model=svm1.fit(x,y)

#model=svm1.fit(x,kohde)

#y2= model.predict(xb)

y2_mean=np.mean(y2)

y2_std=np.std(y2)

y2_cover_std=(y2-y2_mean)/y2_std

y3=y2_cover_std

#y5=scipy.stats.norm.cdf(y3,y2_mean,y2_std)

y4=scipy.stats.norm.sf(y3,y2_mean,y2_std)

ymin=np.min(y4)

deltamax=np.max(y4)-np.min(y4)

y5=((y4-ymin)/deltamax)

#zgrid1=np.array(zoutvalue1).reshape(1000, 400)

#plt.plot(y5)

#cb = plt.scatter(np.arange(1,1000), np.arange(1,400), s=60, c=zoutvalue1)

#plt.show()

#print(np.shape(y5[0]))

#stop(-1)

return(y5)

def load_xy(infname1, lonname1, latname1):

global cache_lons1

global cache_lats1

global cache_x

global cache_y

global cache_z

global cache_x2

global cache_y2

global cache_z2

indf1=pd.read_csv(infname1, sep=';')

#print(indf1)

templons1=indf1[lonname1]

templats1=indf1[latname1]

cache_lons1=np.array(templons1)

cache_lats1=np.array(templats1)

#print (cache_lons1)

#print (cache_lats1)

return(0)

def preprocess_big_raster(infilename1, invarname1, sabluname1, outfilename1, soutfilename1, outvarname1,lon1, lon2, lat1, lat2, width1, height1, roto):

gdal_cut_resize_fromnc_tonc(infilename1, sabluname1, outfilename1, invarname1,lon1, lon2, lat1, lat2)

gdal_cut_resize_tonc(infilename1,soutfilename1 ,lon1, lon2, lat1, lat2, width1, height1)

return(0)

def preprocess_small_single_raster(infilename1, invarname1, outfilename1, outvarname1,lon1, lon2, lat1, lat2, roto):

print(infilename1)

tempfilename1="./process/temp00.nc"

tempfilename2="./process/temp01.nc"

loadnetcdf_single_tofile(infilename1, invarname1, tempfilename1, outvarname1)

#rotate_netcdf_360_to_180(tempfilename1, outvarname1,tempfilename2, outvarname1)

gdal_cut_tonc(tempfilename1,outfilename1 ,lon1, lon2, lat1, lat2)

return(0)

def preprocess_small_timed_raster(infilename1, invarname1, intime1, outfilename1, outvarname1,lon1, lon2, lat1, lat2, roto):

tempfilename1="./process/temp00.nc"

tempfilename2="./process/temp01.nc"

loadnetcdf_timed_tofile(infilename1, invarname1, intime1, tempfilename1, outvarname1)

rotate_netcdf_360_to_180(tempfilename1, outvarname1,tempfilename2, outvarname1)

gdal_cut_tonc(tempfilename2,outfilename1 ,lon1, lon2, lat1, lat2)

return(0)

def rotate_netcdf_360_to_180(infilename1, invarname1,outfilename1, outvarname1):

global cache_lons1

global cache_lats1

global cache_x

global cache_y

global cache_z

pointsx1=[]

pointsy1=[]

gdal_get_nc_to_xyz_in_mem(infilename1, invarname1 )

lonsa=cache_lons1

latsa=cache_lats1

pointsz1=np.array(cache_z)

pointsx1=np.array(cache_x)

pointsy1=np.array(cache_y)

nlatsa1=len(latsa)

nlonsa1=len(lonsa)

pointsz3=np.reshape(pointsz1, (nlatsa1, nlonsa1))

rama1=int(len(lonsa)/2)

pointsz4=np.roll(pointsz3,rama1,axis=1)

lonsb=lonsa-180

save_points_to_netcdf(outfilename1, outvarname1, lonsb, latsa, pointsz4)

return(0)

def gdal_get_nc_to_xyz_in_mem(inname1, invar1):

global cache_lons1

global cache_lats1

global cache_x

global cache_y

global cache_z

global cache_x2

global cache_y2

global cache_z2

imga=loadnetcdf_single_tomem(inname1, invar1)

#plt.imshow(imga)

lonsa=cache_lons1

latsa=cache_lats1

cache_lons1=[]

cache_lats1=[]

cache_x=[]

cache_y=[]

cache_z=[]

cache_x2=[]

cache_y2=[]

cache_z2=[]

dim1=imga.shape

nlat1=len(latsa)

nlon1=len(lonsa)

#plt.plot(imga)

#plt.show()

#print(nlat1)

#print(nlon1)

#quit(-1)

#print(inname1)

#print (nlat1)

#quit(-1)

for iy in range(0,nlat1):

for ix in range(0,nlon1):

pz1=imga[iy,ix]

if (str(pz1) == '--'):

cache_x.append(lonsa[ix])

cache_y.append(latsa[iy])

cache_z.append(0)

else:

cache_x.append(lonsa[ix])

cache_y.append(latsa[iy])

cache_z.append(pz1)

#print(cache_z)

cache_lons1=lonsa

cache_lats1=latsa

#print (pz1)

return (cache_z)

def average_tables(daata1, daata2):

daata3=daata1

pitu=len(daata1)

for n in range(0,pitu):

daata3[n]=(daata1[n]+daata2[n])/2

return(daata3)

def add_scalar(daata, skalar):

pitu=len(daata)

for n in range(0,pitu):

daata[n]=daata[n]+skalar

return(daata)

def gam_multiple_vars( x1, y1, x2):

print ("GAM")

xa1=np.array(x1)

ya1=np.array(y1)

xa2=np.array(x2)

#print (xa1)

- quit(-1)

sha1=np.shape(x1)

dim2=sha1[1]

x=xa1.reshape((-1, dim2))

y=ya1.reshape((-1, 1))

xb=xa2.reshape((-1, dim2))

#sc = StandardScaler()

#xa = sc.fit_transform(x)

#xba = sc.transform(xb)

#model = LogisticGAM().fit(x, y)

model = LinearGAM().fit(x, y)

y2= model.predict(xb)

return(y2)

def random_forest_multiple_vars( x1, y1, x2):

print ("RF")

global RF_estimators1

global RF_features1

print(RF_estimators1, RF_features1)

#quit(-1)

xa1=np.array(x1)

ya1=np.array(y1)

xa2=np.array(x2)

#print (xa1)

- quit(-1)

sha1=np.shape(x1)

dim2=sha1[1]

x=xa1.reshape((-1, dim2))

y=ya1.reshape((-1, 1))

xb=xa2.reshape((-1, dim2))

#model = LinearRegression().fit(x, y)

#degree=3

#polyreg=make_pipeline(PolynomialFeatures(degree),LinearRegression())

#model=polyreg.fit(x,y)

sc = StandardScaler()

xa = sc.fit_transform(x)

xba = sc.transform(xb)

## orig

##model = RandomForestRegressor(n_estimators=10, max_features=2).fit(xa,y)

model = RandomForestRegressor(n_estimators=RF_estimators1, max_features=RF_features1).fit(xa,y)

y2= model.predict(xba)

return(y2)

def save_points_toxyz(filename, x,y,z):

print("Dummy function only")

return(0)

def linear_regression_multiple_vars( x1, y1, x2):

print ("MLR")

xa1=np.array(x1)

ya1=np.array(y1)

xa2=np.array(x2)

sha1=np.shape(x1)

dim2=sha1[1]

x=xa1.reshape((-1, dim2))

y=ya1.reshape((-1, 1))

xb=xa2.reshape((-1, dim2))

#model = LinearRegression().fit(x, y)

degree=3

polyreg=make_pipeline(PolynomialFeatures(degree),LinearRegression())

model=polyreg.fit(x,y)

y2= model.predict(xb)

return(y2)

def linear_regression_singe_var( x1, y1, x2):

#print (x1)

#print(y1)

#return(0)

#xa1=np.array(x1)

#ya1=np.array(y1)

#xa2=np.array(x2)

xa1=np.array( x1)

ya1=np.array(y1)

xa2=np.array(x2)

sha1=np.shape(x1)

dim2=sha1[1]

x=xa1.reshape((-1, dim2))

y=ya1.reshape((-1, 1))

xb=xa2.reshape((-1, dim2))

#x=xa1.reshape((-1, 1))

#y=ya1.reshape((-1, 1))

#xb=xa2.reshape((-1, 1))

#print (x)

#print (y)

model = LinearRegression().fit(x, y)

#model = RandomForestRegressor(n_estimators=10, max_features=2).fit(x,y)

#degree=2

#polyreg=make_pipeline(PolynomialFeatures(degree),LinearRegression())

#polyreg=make_pipeline(PolynomialFeatures(degree), )

#model=polyreg.fit(x,y)

## warning not xb

y2= model.predict(xb)

#print(y2)

#print("LR")

return(y2)

def save_points_to_netcdf(outfilename1, outvarname1, xoutlons1, xoutlats1, zoutvalue1):

nlat1=len(xoutlats1)

nlon1=len(xoutlons1)

#print ("Save ...")

#print (nlat1)

#print (nlon1)

zoutvalue2=np.array(zoutvalue1).reshape(nlat1, nlon1)

#indata_set1=indata1

ncout1 = nc.Dataset(outfilename1, 'w', format='NETCDF4')

outlat1 = ncout1.createDimension('lat', nlat1)

outlon1 = ncout1.createDimension('lon', nlon1)

outlats1 = ncout1.createVariable('lat', 'f4', ('lat',))

outlons1 = ncout1.createVariable('lon', 'f4', ('lon',))

outvalue1 = ncout1.createVariable(outvarname1, 'f4', ('lat', 'lon',))

outvalue1.units = 'Unknown'

outlats1[:] = xoutlats1

outlons1[:] = xoutlons1

outvalue1[:, :] =zoutvalue2[:]

ncout1.close()

return 0

def gdal_cut_resize_fromnc_tonc(inname1, inname2, outname2, invar2,lon1, lon2, lat1, lat2):

imga=loadnetcdf_single_tomem(inname2, invar2)

dim1=imga.shape

height1=dim1[0]

width1=dim1[1]

print (width1)

print (height1)

jono1="gdalwarp -te "+str(lon1)+" "+str(lat1)+" "+str(lon2)+" "+str(lat2)+" "+"-ts "+str(width1)+" "+str(height1)+ " " +inname1+" "+outname2

print(jono1)

os.system(jono1)

return

def gdal_get_points_from_file(inname1, invar1,pointsx1,pointsy1):

global cache_lons1

global cache_lats1

global cache_x

global cache_y

global cache_z

global cache_x2

global cache_y2

global cache_z2

imga=loadnetcdf_single_tomem(inname1, invar1)

#plt.imshow(imga)

lonsa=cache_lons1

latsa=cache_lats1

cache_lons1=[]

cache_lats1=[]

cache_x=[]

cache_y=[]

cache_z=[]

cache_x2=[]

cache_y2=[]

cache_z2=[]

dim1=imga.shape

nlat1=dim1[0]

nlon1=dim1[1]

pitu=len(pointsx1)

#px1=10

#py1=45

for n in range(0,pitu):

px1=pointsx1[n]

py1=pointsy1[n]

#print('.')

for iy in range(0,nlat1):

if(py1>=latsa[iy]):

for ix in range(0,nlon1):

if(px1>=lonsa[ix]):

pz1=imga[iy,ix]

cache_x.append(lonsa[ix])

cache_y.append(latsa[iy])

cache_z.append(pz1)

#print(cache_z)

cache_lons1=lonsa

cache_lats1=latsa

#print (pz1)

return (cache_z)

def gdal_cut_resize_tonc(inname1, outname1, lon1, lon2, lat1, lat2, width1, height1):

#gdalwarp -te -5 41 10 51 -ts 1000 0 input.tif output.tif

jono1="gdalwarp -of netcdf -te "+str(lon1)+" "+str(lat1)+" "+str(lon2)+" "+str(lat2)+" "+"-ts "+str(width1)+" "+str(height1)+ " " +inname1+" "+outname1

print(jono1)

os.system(jono1)

return

def interpolate_cache(x_min, y_min, x_max, y_max, reso1):

global cache_lons1

global cache_lats1

global cache_x

global cache_y

global cache_z

global cache_x2

global cache_y2

global cache_z2

cache_x2=[]

cache_y2=[]

cache_z2=[]

cache_lons1=[]

cache_lats1=[]

pitu1=len(cache_z)

raja1=pitu1

for i in range(0,raja1):

#print (i)

#print (cache_z[i])

try:

xx=cache_x[i]

yy=cache_y[i]

zz=cache_z[i]

if (str(zz) == '--'):

raja1=raja1-1

#print (zz)

#print(".")

else:

cache_x2.append(xx)

cache_y2.append(yy)

cache_z2.append(zz)

except IndexError:

print("BRK")

break

lonsn=(int(x_max-x_min)/reso1)

latsn=(int(y_max-y_min)/reso1)

cache_lons1=np.linspace(x_min, x_max, lonsn)

cache_lats1=np.linspace(y_min, y_max, latsn)

#print (cache_z2)

#print (cache_x2)

#exit(-1)

grid_x, grid_y = np.mgrid[x_min:x_max:reso1, y_min:y_max:reso1]

rbfi = Rbf(cache_x2, cache_y2, cache_z2)

di = rbfi(grid_x, grid_y)

#plt.figure(figsize=(15, 15))

#plt.imshow(di.T, origin="lower")

#cb = plt.scatter(df.x, df.y, s=60, c=df.por, edgecolor='#ffffff66')

#plt.colorbar(cb, shrink=0.67)

#plt.show()

return(di.T)

def makepoints(imgin1, lonsin1, latsin1):

global cache_x

global cache_y

global cache_z

cache_x=[]

cache_y=[]

cache_z=[]

dim1=imgin1.shape

nlat1=dim1[0]

nlon1=dim1[1]

k=0

for iy in range(0,nlat1):

for ix in range(0,nlon1):

zz=imgin1[iy,ix]

cache_x.append(lonsin1[ix])

cache_y.append(latsin1[iy])

if (str(zz) == '--'):

## warning there 0 append to equalize grid

cache_z.append(0)

k=1

else:

cache_z.append(zz)

#cache_z.append(imgin1[iy,ix])

return(0)

def gdal_reso(inname1, outname1, reso1):

jono1="gdalwarp -tr "+str(reso1)+" "+str(reso1)+" "+inname1+" "+outname1

os.system(jono1)

return(0)

def gdal_cut_tonc(inname1, outname1, lon1, lon2, lat1, lat2):

jono1="gdalwarp -te "+str(lon1)+" "+str(lat1)+" "+str(lon2)+" "+str(lat2)+" "+inname1+" "+outname1

print(jono1)

os.system(jono1)

return(0)

def savenetcdf_single_frommem(outfilename1, outvarname1, xoutvalue1,xoutlats1,xoutlons1):

nlat1=len(xoutlats1)

nlon1=len(xoutlons1)

#indata_set1=indata1

ncout1 = nc.Dataset(outfilename1, 'w', format='NETCDF4')

outlat1 = ncout1.createDimension('lat', nlat1)

outlon1 = ncout1.createDimension('lon', nlon1)

outlats1 = ncout1.createVariable('lat', 'f4', ('lat',))

outlons1 = ncout1.createVariable('lon', 'f4', ('lon',))

outvalue1 = ncout1.createVariable(outvarname1, 'f4', ('lat', 'lon',))

outvalue1.units = 'Unknown'

outlats1[:] = xoutlats1

outlons1[:] = xoutlons1

outvalue1[:, :] =xoutvalue1[:]

ncout1.close()

return 0

def loadnetcdf_single_tomem(infilename1, invarname1):

global cache_lons1

global cache_lats1

print(infilename1)

inc1 = nc.Dataset(infilename1)

inlatname1="lat"

inlonname1="lon"

inlats1=inc1[inlatname1][:]

inlons1=inc1[inlonname1][:]

cache_lons1=inlons1

cache_lats1=inlats1

indata1_set1 = inc1[invarname1][:]

dim1=indata1_set1.shape

nlat1=dim1[0]

nlon1=dim1[1]

inc1.close()

return (indata1_set1)

def loadnetcdf_single_tofile(infilename1, invarname1, outfilename1, outvarname1):

inc1 = nc.Dataset(infilename1)

inlatname1="lat"

inlonname1="lon"

inlats1=inc1[inlatname1][:]

inlons1=inc1[inlonname1][:]

indata1_set1 = inc1[invarname1][:]

dim1=indata1_set1.shape

nlat1=dim1[0]

nlon1=dim1[1]

#indata_set1=indata1

ncout1 = nc.Dataset(outfilename1, 'w', format='NETCDF4')

outlat1 = ncout1.createDimension('lat', nlat1)

outlon1 = ncout1.createDimension('lon', nlon1)

outlats1 = ncout1.createVariable('lat', 'f4', ('lat',))

outlons1 = ncout1.createVariable('lon', 'f4', ('lon',))

outvalue1 = ncout1.createVariable(outvarname1, 'f4', ('lat', 'lon',))

outvalue1.units = 'Unknown'

outlats1[:] = inlats1

outlons1[:] = inlons1

outvalue1[:, :] = indata1_set1[:]

ncout1.close()

return 0

def loadnetcdf_timed_tofile(infilename1, invarname1, intime1, outfilename1, outvarname1):

inc1 = nc.Dataset(infilename1)

inlatname1="lat"

inlonname1="lon"

inlats1=inc1[inlatname1][:]

inlons1=inc1[inlonname1][:]

indata1 = inc1[invarname1][:]

dim1=indata1.shape

nlat1=dim1[1]

nlon1=dim1[2]

indata_set1=indata1[intime1]

#img01=indata_set1

#img1.replace(np.nan, 0, inplace=True)

#img1 = np.where(isna(img10), 0, img10)

#where_are_NaNs = np.isnan(img1)

#img1[where_are_NaNs] = 99

#img1 = np.where(np.isnan(img01), 0, img01)

ncout1 = nc.Dataset(outfilename1, 'w', format='NETCDF4')

outlat1 = ncout1.createDimension('lat', nlat1)

outlon1 = ncout1.createDimension('lon', nlon1)

outlats1 = ncout1.createVariable('lat', 'f4', ('lat',))

outlons1 = ncout1.createVariable('lon', 'f4', ('lon',))

outvalue1 = ncout1.createVariable(outvarname1, 'f4', ('lat', 'lon',))

outvalue1.units = 'Unknown'

outlats1[:] = inlats1

outlons1[:] = inlons1

outvalue1[:, :] = indata_set1

ncout1.close()

return 0

- downscaler funktzione !

- def downscale_data_1(input_rasters,loadfiles,intime1,lon1,lon2,lat1,lat2,width1,height1,roto,areaname,sresultfilename1,sresultvarname1,infilenames1, outfilenames1,soutfilenames1,invarnames1,outvarnames1, soutvarnames1):

def downscale_data_1(input_rasters,loadfiles,intime1,lon1,lon2,lat1,lat2,width1,height1,roto,areaname,sresultfilename1,sresultvarname1,infilenames1, invarnames1):

global DS_method

global DS_show

global DS_input_log

debug=0

#if(input_rasters==1):

# os.system("del *.nc")

outfilenames1=[]

outvarnames1=[]

soutfilenames1=[]

soutvarnames1=[]

huba0=len(infilenames1)

for n in range (0,huba0):

sandersson1=invarnames1[n]

outvarnames1.append(sandersson1)

soutvarnames1.append(sandersson1)

## big raster??

outfilenames1.append("./process/"+areaname+"_"+invarnames1[0]+".nc")

if(input_rasters==1):

#preprocess_small_timed_raster(infilenames1[0], invarnames1[0], intime1, outfilenames1[0], outvarnames1[0], lon1, lon2, lat1, lat2,roto)

preprocess_small_single_raster(infilenames1[0], invarnames1[0], outfilenames1[0], outvarnames1[0], lon1, lon2, lat1, lat2,roto)

#quit(-1)

huba=len(infilenames1)

for n in range (1,huba):

ofnamee="./process/"+areaname+"_"+outvarnames1[n]+"_"+str(n)+".nc"

sofnamee="./process/"+areaname+"_"+outvarnames1[n]+"_"+str(n)+"_s.nc"

print(ofnamee)

print(sofnamee)

outfilenames1.append(ofnamee)

soutfilenames1.append(sofnamee)

if(input_rasters==1):

print("PP ",infilenames1[n])

preprocess_big_raster(infilenames1[n], invarnames1[n], outfilenames1[0], outfilenames1[n], soutfilenames1[n-1], outvarnames1[n], lon1, lon2, lat1, lat2, width1, height1, roto)

imgs=[]

pointsx=[]

pointsy=[]

pointsz=[]

mlats=[]

mlons=[]

spointsx=[]

spointsy=[]

spointsz=[]

simgs=[]

slats=[]

slons=[]

len1=len(outfilenames1)

for n in range(0,len1):

imgs.append(loadnetcdf_single_tomem(outfilenames1[n], "Band1"))

mlons.append(cache_lons1)

mlats.append(cache_lats1)

makepoints(imgs[n], mlons[n], mlats[n])

pointsx.append(cache_x)

pointsy.append(cache_y)

pointsz.append(cache_z)

len1=len(soutfilenames1)

for n in range(0,len1):

simgs.append(loadnetcdf_single_tomem(soutfilenames1[n], "Band1"))

slons.append(cache_lons1)

slats.append(cache_lats1)

makepoints(simgs[n], slons[n], slats[n])

spointsx.append(cache_x)

spointsy.append(cache_y)

spointsz.append(cache_z)

if(debug==1):

print("Specie habitation.")

load_xy("humanlgm.txt","Lon", "Lat")

klats1=cache_lats1

klons1=cache_lons1

spointszout=[]

ppointsx=[]

ppointsy=[]

ppointsz=[]

gdal_get_points_from_file(outfilenames1[1], invarnames1[1], klons1, klats1)

ppointsx.append(cache_x)

ppointsy.append(cache_y)

ppointsz.append(cache_z)

gdal_get_points_from_file(outfilenames1[2], invarnames1[2], klons1, klats1)

ppointsx.append(cache_x)

ppointsy.append(cache_y)

ppointsz.append(cache_z)

#gdal_get_points_from_file(outfilenames1[2], invarnames1[2], klons1, klats1)

#ppointsx.append(cache_x)

#ppointsy.append(cache_y)

#ppointsz.append(cache_z)

#bpoints1=ppointsz[0]

#apoints1=ppointsz[0]

#cpoints1=spointsz[0]

bpoints1=ppointsz[0]

apoints1=ppointsz[0]

cpoints1=spointsz[0]

spointszout.append(probability_single_var(apoints1, bpoints1, cpoints1))

bpoints1=ppointsz[1]

apoints1=ppointsz[1]

cpoints1=spointsz[1]

spointszout.append(probability_single_var(apoints1, bpoints1, cpoints1))

#bpoints1=ppointsz[2]

#apoints1=ppointsz[2]

#cpoints1=spointsz[2]

#spointszout.append(probability_single_var(apoints1, bpoints1, cpoints1))

odex1=0

sdataout=spointszout[0]*spointszout[1]

pointsxout1=spointsx[0]

pointsyout1=spointsy[0]

slonsout1=slons[0]

slatsout1=slats[0]

save_points_to_netcdf(sresultfilename1, sresultvarname1, slonsout1, slatsout1, sdataout)

plt.imshow( np.array(sdataout).reshape(len(slatsout1), len(slonsout1) ) )

plt.show()

return(1)

## main sektion of ds

sla=[]

len1=len(pointsz)

for n in range(1,len1):

sla.append(pointsz[n])

slb=[]

for n in range(0,len1-1):

print (n)

slb.append(spointsz[n])

apoints1=list(zip(*sla))

bpoints1=pointsz[0]

cpoints1=list(zip(*slb))

spointszout=[]

#if(DS_input_log==0):

#if(DS_input_log==1):

# spointszout.append(np.exp(random_forest_multiple_vars(apoints1, np.log(bpoints1), cpoints1)))

# spointszout.append(np.exp(gam_multiple_vars(apoints1, np.log(bpoints1), cpoints1)))

# spointszout.append(np.exp(linear_regression_multiple_vars(apoints1, np.log(bpoints1), cpoints1)))

#sdataout=average_tables(spointszout[0],spointszout[1])

if(DS_method==0):

spointszout.append(random_forest_multiple_vars(apoints1, bpoints1, cpoints1))

sdataout=spointszout[0]

if(DS_method==1):

spointszout.append(gam_multiple_vars(apoints1, bpoints1, cpoints1))

sdataout=spointszout[0]

if(DS_method==2):

spointszout.append(linear_regression_multiple_vars(apoints1, bpoints1, cpoints1))

sdataout=spointszout[0]

if(DS_method==77):

spointszout.append(random_forest_multiple_vars(apoints1, bpoints1, cpoints1))

spointszout.append(gam_multiple_vars(apoints1, bpoints1, cpoints1))

spointszout.append(linear_regression_multiple_vars(apoints1, bpoints1, cpoints1))

#sdataout=average_tables(spointszout[0],spointszout[1] )

#sdataout=average_tables(spointszout[0],spointszout[1], spointszout[2])

sdataout1=average_tables(spointszout[0],spointszout[1] )

sdataout2=average_tables(spointszout[1],spointszout[2] )

sdataout=average_tables(sdataout1, sdataout2 )

if(DS_method==88):

spointszout.append(random_forest_multiple_vars(apoints1, bpoints1, cpoints1))

spointszout.append(gam_multiple_vars(apoints1, bpoints1, cpoints1))

spointszout.append(linear_regression_multiple_vars(apoints1, bpoints1, cpoints1))

#sdataout=average_tables(spointszout[0],spointszout[1] )

#sdataout=average_tables(spointszout[0],spointszout[1], spointszout[2])

sdataout1=average_tables(spointszout[0],spointszout[1] )

sdataout2=average_tables(10*spointszout[1],1*spointszout[2]/10 )/10

sdataout=average_tables(sdataout1, sdataout2 )

pointsxout1=spointsx[0]

pointsyout1=spointsy[0]

slonsout1=slons[0]

slatsout1=slats[0]

save_points_to_netcdf(sresultfilename1, sresultvarname1, slonsout1, slatsout1, sdataout)

#plt.register_cmap(name='viridis', cmap=cmaps.viridis)

#plt.set_cmap(cm.viridis)

#cmaps = OrderedDict()

#kolormap='jet'

#kolormap='Spectral_r'

#kolormap='gist_rainbow_r'

#kolormap='BrBG'

#kolormap='rainbow'

kolormap='viridis_r'

if(DS_show==1):

plt.imshow(np.array(sdataout).reshape(len(slatsout1), len(slonsout1)) , cmap=kolormap)

plt.ylim(0, len(slatsout1))

plt.show()

return(0)

- attempt to post process rain in mountains!

- POST PROCESSORI warning experiment only

def manipulate_rainfall_data(demname, rainname, oname2):

# try to exaggerate rainfall in mountains

dem1=loadnetcdf_single_tomem(demname,"Band1")

rain0=loadnetcdf_single_tomem(rainname,"Rain")

rain1=np.flipud(rain0)

shape1=np.shape(rain1)

print(shape1)

dem2=np.ravel(dem1)

rain2=np.ravel(rain1)

len1=len(rain2)

#print (len1)

outta1=rain2*0

limith1=1200

for n in range(0,len1):

r=rain2[n]

o=r

d=dem2[n]

rk=d/limith1

if (d>limith1):

o=r*rk

outta1[n]=o

out00=np.reshape(outta1,shape1)

#out1=np.flipud(out00)

out1=out00

savenetcdf_single_frommem(oname2, "Rain", out1,cache_lats1, cache_lons1)

return(out1)

def match_raster(iname,matchname, oname):

loadnetcdf_single_tomem(iname, "Band1")

lon1=np.min(cache_lons1)

lon2=np.max(cache_lons1)

lat1=np.min(cache_lats1)

lat2=np.max(cache_lats1)

gdal_cut_resize_fromnc_tonc(iname, matchname, oname, "Rain",lon1, lon2, lat1, lat2)

- attempt to post process rain in mountains!

- POST PROCESSORI warning experiment 2 only

- enhances big rainfalls, diminishes small rainfalls

- based on y=kx+b

def manipulate_rainfall_data_2(dname1, dname2, oname2, ofset1, ofset2, k1):

# try to exaggerate rainfall in mountains

dat10=loadnetcdf_single_tomem(dname1,"Band1")

dat20=loadnetcdf_single_tomem(dname2,"Rain")

shape1=np.shape(dat10)

dee1=np.ravel(dat10)

dee2=np.ravel(dat20)

len1=len(dee1)

len2=len(dee2)

outta1=dee2*0

a=ofset1

b=ofset2

k=k1

for n in range(0,len1):

d1=dee1[n]

d2=dee2[n]

o0=(d1+d2)/2

o1=o0+b

o2=(o1-a)*k

o3=o2+a

outta1[n]=o3

out00=np.reshape(outta1,shape1)

#out1=np.flipud(out00)

out1=out00

if(DS_show==1):

kolormap='viridis_r'

plt.imshow(out1, cmap=kolormap)

#plt.ylim(0, len(slatsout1))

plt.show()

savenetcdf_single_frommem(oname2, "Rain", out1,cache_lats1, cache_lons1)

return(out1)

- main program

input_rasters=1

debug=0 ## human habitation test

- acquire basic data, loadfiles=1

loadfiles=1

intime1=1

- lon1=-4.5

- lon2=10.0

- lat1=42.0

- lat2=50.5

lon1=-180

lon2=180

lat1=-90

lat2=90

width1=300

height1=300

- jn

- width1=112

- height1=130

- JN WARNING

- width1=200

- height1=200

roto=1

areaname="planet"

sresultfilename1="./output/dskaled1.nc"

sresultvarname1="TSA"

infilenames1=[]

invarnames1=[]

infilenames1.append('tsa.nc')

- infilenames1.append('./indata/Map19_PALEOMAP_1deg_Late_Cretaceous_80Ma.nc')

infilenames1.append('dem1.nc')

infilenames1.append('lons.nc')

infilenames1.append('lats.nc')

- infilenames1.append('./process/accu_dem_1.nc')

- infilenames1.append('./process/accu_windir.nc')

invarnames1.append("tsa")

invarnames1.append("Band1")

invarnames1.append("Band1")

invarnames1.append("Band1")

RF_estimators1=400

- RF_features1=4

RF_features1=1

DS_method=1

DS_show=1

DS_input_log=0

downscale_data_1(input_rasters,loadfiles,intime1,lon1,lon2,lat1,lat2,width1,height1,roto,areaname,sresultfilename1,sresultvarname1,infilenames1, invarnames1)

print(".")

quit(-1)

Post-process downscaled file

- postprocess output of python dscaler

library(raster)

library(ncdf4)

library(rgdal)

library(png)

file1="./output/dskaled1.nc"

file2="./output/dskaled2.nc"

r1<-raster(file1)

crs(r1)<-"+proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0"

writeRaster(r1, file2, overwrite=TRUE, format="CDF", varname="tsa", varunit="m",

longname="tsa", xname="lon", yname="lat")

Lisanslama

- Şu seçeneklerde özgürsünüz:

- paylaşım – eser paylaşımı, dağıtımı ve iletimi

- içeriği değiştirip uyarlama – eser adaptasyonu

- Aşağıdaki koşullar geçerli olacaktır:

- atıf – Esere yazar veya lisans sahibi tarafından belirtilen (ancak sizi ya da eseri kullanımınızı desteklediklerini ileri sürmeyecek bir) şekilde atıfta bulunmalısınız.

- benzer paylaşım – Maddeyi yeniden karıştırır, dönüştürür veya inşa ederseniz, katkılarınızı orijinal olarak aynı veya uyumlu lisans altında dağıtmanız gerekir.

Altyazılar

Bu dosyada gösterilen öğeler

betimlenen

Vikiveri ögesi olmayan bir değer

25 Aralık 2021

image/png

Dosya geçmişi

Dosyanın herhangi bir zamandaki hâli için ilgili tarih/saat kısmına tıklayın.

| Tarih/Saat | Küçük resim | Boyutlar | Kullanıcı | Yorum | |

|---|---|---|---|---|---|

| güncel | 18.34, 17 Ocak 2022 |  | 2.240 × 1.488 (1,31 MB) | Merikanto | Update |

| 17.35, 25 Aralık 2021 |  | 2.192 × 1.472 (661 KB) | Merikanto | Uploaded own work with UploadWizard |

Dosya kullanımı

Bu görüntü dosyasına bağlantısı olan sayfalar: